Chapter 1.8 - Understanding Docker Network Modes

Docker provides several network modes that determine how containers connect to the host machine and to each other. Understanding these modes is crucial for designing robust and efficient containerized applications.

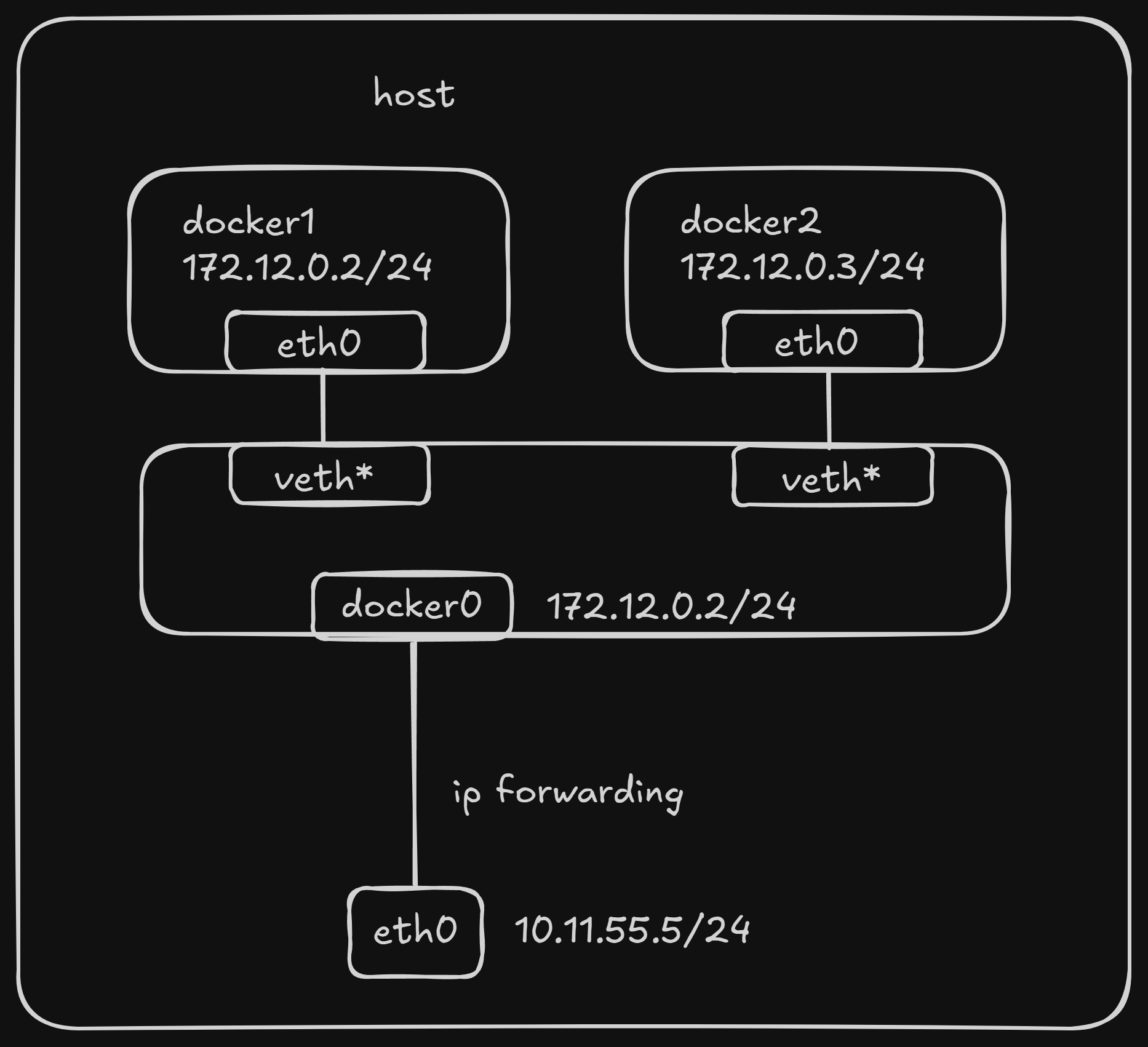
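
As a quick orientation, you can list the networks that back these modes from the command line. The helpers below are a minimal sketch: the function names `list_networks` and `network_mode_of` are made up for this illustration, and a standard Docker installation is assumed, where the built-in `bridge`, `host`, and `none` networks exist.

```shell
# List every Docker network together with its driver (hypothetical helper name).
list_networks() {
    docker network ls --format '{{.Name}} {{.Driver}}'
}

# Print the network mode recorded in a container's configuration.
# $1 is a container name or ID.
network_mode_of() {
    docker inspect --format '{{.HostConfig.NetworkMode}}' "$1"
}
```

Calling `list_networks` on a fresh installation should show one entry for each built-in network. `network_mode_of <container>` reports which mode a given container was started with.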

1. Bridge Mode (Default)

The Bridge network mode is the default and most commonly used networking option for Docker containers.

How it Works:

When the Docker daemon starts, it creates a virtual bridge named docker0 on the host machine. All Docker containers launched on this host by default connect to this virtual bridge. This virtual bridge functions similarly to a physical network switch, connecting all containers on the host machine into a Layer 2 network.

- Each new container is assigned an IP address from the `docker0` subnet.

- The `docker0` bridge’s IP address is set as the default gateway for the containers.

- Docker creates a pair of virtual Ethernet devices called a `veth pair`. One end of the `veth pair` (named `eth0`) is placed inside the new container, acting as the container’s network interface. The other end, typically named `vethxxxx` (e.g., `vethabc123`), remains on the host and is attached to the `docker0` bridge.

This setup allows containers to communicate with each other on the same host and with the outside world (via NAT).
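
The addressing described above can be read back from Docker itself. This is a small sketch rather than a definitive recipe: the helper names `bridge_subnet` and `container_ip` are hypothetical, and the built-in network behind `docker0` is assumed to be the one Docker calls `bridge`.

```shell
# Print the subnet and gateway of a Docker network.
# $1 is the network name; it defaults to the built-in "bridge" network.
bridge_subnet() {
    docker network inspect \
        --format '{{range .IPAM.Config}}{{.Subnet}} {{.Gateway}}{{end}}' \
        "${1:-bridge}"
}

# Print one "network-name ip-address" line for every network
# the container ($1) is attached to.
container_ip() {
    docker inspect \
        --format '{{range $name, $net := .NetworkSettings.Networks}}{{println $name $net.IPAddress}}{{end}}' \
        "$1"
}
```

The gateway printed by `bridge_subnet` should match the address of the `docker0` interface on the host, and each address printed by `container_ip` should fall inside the reported subnet.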

Port Publishing (`-p` / `--publish`):

When you use `docker run -p <host_port>:<container_port>` (or `--publish`), Docker automatically configures Destination Network Address Translation (DNAT) rules in the host’s iptables. These rules forward incoming traffic from the specified host port to the corresponding port on the container, making the container’s service accessible from outside the host. You can inspect these rules using `sudo iptables -t nat -vnL`.
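
A short sketch of port publishing in practice follows. The container name `web_demo`, the `nginx:alpine` image, and host port 8080 are illustrative assumptions, as are the helper names. The second helper also assumes that Docker manages its rules through iptables and keeps them in a chain named `DOCKER`, which may differ on hosts using another firewall backend.

```shell
# Start an nginx container and publish container port 80 on a host port.
# $1 is the host port; it defaults to 8080.
publish_demo() {
    docker run -d --name web_demo -p "${1:-8080}:80" nginx:alpine
}

# Show the port mappings Docker recorded for the container,
# then the rules in the DOCKER chain of the nat table.
show_publish_rules() {
    docker port web_demo
    sudo iptables -t nat -vnL DOCKER
}
```

After `publish_demo`, a request to the chosen host port (for example with `curl http://localhost:8080/`) should be answered by nginx inside the container. `docker rm -f web_demo` cleans up afterwards.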

Demonstration:

- Run Containers in Default Bridge Mode:

  By default, if you don’t specify the `--network` parameter, Docker uses the bridge network mode. We’ll use a basic `alpine` image for these examples, which is small and common.

      docker run -d --name docker_bri1 alpine sleep 3600
      docker run -d --name docker_bri2 alpine sleep 3600

  - `docker run -d`: Runs the container in detached mode (in the background).
  - `--name`: Assigns a readable name to the container.
  - `alpine`: The Docker image to use.
  - `sleep 3600`: Keeps the container running for 1 hour.

- Inspect the docker0 Bridge (on the host):

  You can view the `docker0` bridge and connected `veth` interfaces using Linux network tools.

      # You might need to install bridge-utils and net-tools if not already present
      # For Debian/Ubuntu: sudo apt-get update && sudo apt-get install bridge-utils net-tools
      # For CentOS/RHEL: sudo yum install bridge-utils net-tools
      sudo brctl show

  This command shows the `docker0` bridge and the `veth` interfaces (e.g., `vethxxxx`) that connect your containers to it.

- Inspect Container Network Configuration:

  Access one of the containers and check its network settings.

      docker exec -it docker_bri1 sh

  Inside the `docker_bri1` container, execute:

      / # ip a
      / # ip r

  - `ip a`: Shows network interfaces and assigned IP addresses (e.g., `eth0`).
  - `ip r`: Displays the routing table, showing `docker0`’s IP as the default gateway.

  Type `exit` to leave the container’s shell.
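
The same inspection can be scripted. The sketch below assumes the `iproute2` tools are installed on the host and uses them in place of `brctl`. The helper names `bridge_members` and `ping_by_ip` are hypothetical.

```shell
# List the host-side interfaces attached to a bridge.
# $1 is the bridge name; it defaults to docker0.
bridge_members() {
    ip -brief link show master "${1:-docker0}"
}

# ping_by_ip FROM TO
# Look up the IP address of container TO, then ping it from inside container FROM.
ping_by_ip() (
    target_ip=$(docker inspect \
        --format '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' "$2")
    docker exec "$1" ping -c 2 "$target_ip"
)
```

For example, `ping_by_ip docker_bri1 docker_bri2` checks that the two containers started above can reach each other by IP address over `docker0`. The lookup assumes the target container is attached to a single network.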

Custom Bridge Networks (Recommended for Multi-Container Communication):

The default bridge network lets containers on the same host communicate by IP address only; it does not resolve container names. Custom (user-defined) bridge networks are the recommended approach for inter-container communication: they provide better isolation, automatic DNS resolution of container names, and easier management than the legacy `--link` parameter.

Demonstration with Custom Bridge Network:

- Create a New Custom Docker Network:

  This creates a new, isolated bridge network.

      docker network create -d bridge my-net

  - `-d bridge`: Specifies the network driver as `bridge`. Other drivers like `overlay` (for Docker Swarm) exist, but `bridge` is for single-host setups.

- Run Containers and Connect to the New `my-net` Network:

      docker run -it --rm --name busybox1 --network my-net busybox sh

  - `--rm`: Automatically removes the container when it exits.
  - `busybox`: A very small image useful for testing.

  Open a new terminal and run another container, connecting it to the same `my-net` network:

      docker run -it --rm --name busybox2 --network my-net busybox sh

- Verify Container Connection:

  In a third terminal, list your running containers:

      docker container ls

  You should see `busybox1` and `busybox2` listed.

- Test Inter-Container Communication (DNS Resolution):

  Go back to the terminal where `busybox1` is running and ping `busybox2` by its container name:

      / # ping busybox2
      PING busybox2 (172.19.0.3): 56 data bytes
      64 bytes from 172.19.0.3: seq=0 ttl=64 time=0.072 ms
      64 bytes from 172.19.0.3: seq=1 ttl=64 time=0.118 ms
      ^C # Press Ctrl+C to stop

  As you can see, `busybox2` is resolved to its IP address (e.g., `172.19.0.3`), and the `ping` is successful. This demonstrates that containers within the same custom network can communicate directly using their container names, thanks to Docker’s built-in DNS service.

  Similarly, from the `busybox2` container, you can `ping busybox1`:

      / # ping busybox1
      PING busybox1 (172.19.0.2): 56 data bytes
      64 bytes from 172.19.0.2: seq=0 ttl=64 time=0.064 ms
      64 bytes from 172.19.0.2: seq=1 ttl=64 time=0.143 ms
      ^C # Press Ctrl+C to stop

  This confirms successful communication between `busybox1` and `busybox2`.
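
Containers that are already running can also be attached to a custom network after the fact. The following is a sketch under the assumption that the `my-net` network and the `docker_bri1` / `docker_bri2` containers from the earlier demonstrations still exist. The helper names are hypothetical.

```shell
# attach_to_network NETWORK CONTAINER...
# Connect one or more running containers to an existing network.
attach_to_network() (
    net=$1
    shift
    for ctr in "$@"; do
        docker network connect "$net" "$ctr"
    done
)

# Print the DNS resolver configuration seen inside a container ($1).
show_resolver() {
    docker exec "$1" cat /etc/resolv.conf
}
```

After `attach_to_network my-net docker_bri1 docker_bri2`, the command `docker exec docker_bri1 ping -c 2 docker_bri2` should succeed by name. For a container on a custom network, `show_resolver` typically reports Docker’s embedded DNS server at `127.0.0.11`.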

For multiple interconnected containers, Docker Compose is highly recommended. Docker Compose allows you to define and run multi-container Docker applications, simplifying networking, volume management, and service orchestration.
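
As a rough illustration of the Compose approach, the helper below writes a minimal Compose file with two services. The service names `app1` and `app2`, the target file name `docker-compose.yml`, and the helper name `write_compose_demo` are all assumptions made for this sketch.

```shell
# write_compose_demo DIR
# Write a two-service Compose file into DIR (the directory is created if missing).
write_compose_demo() {
    mkdir -p "$1" && cat > "$1/docker-compose.yml" <<'EOF'
services:
  app1:
    image: alpine
    command: sleep 3600
  app2:
    image: alpine
    command: sleep 3600
EOF
}
```

Running `write_compose_demo ./compose_demo`, then `docker compose up -d` inside that directory, starts both services on a network that Compose creates for the project. `docker compose exec app1 ping -c 2 app2` should then reach the second service by its service name.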

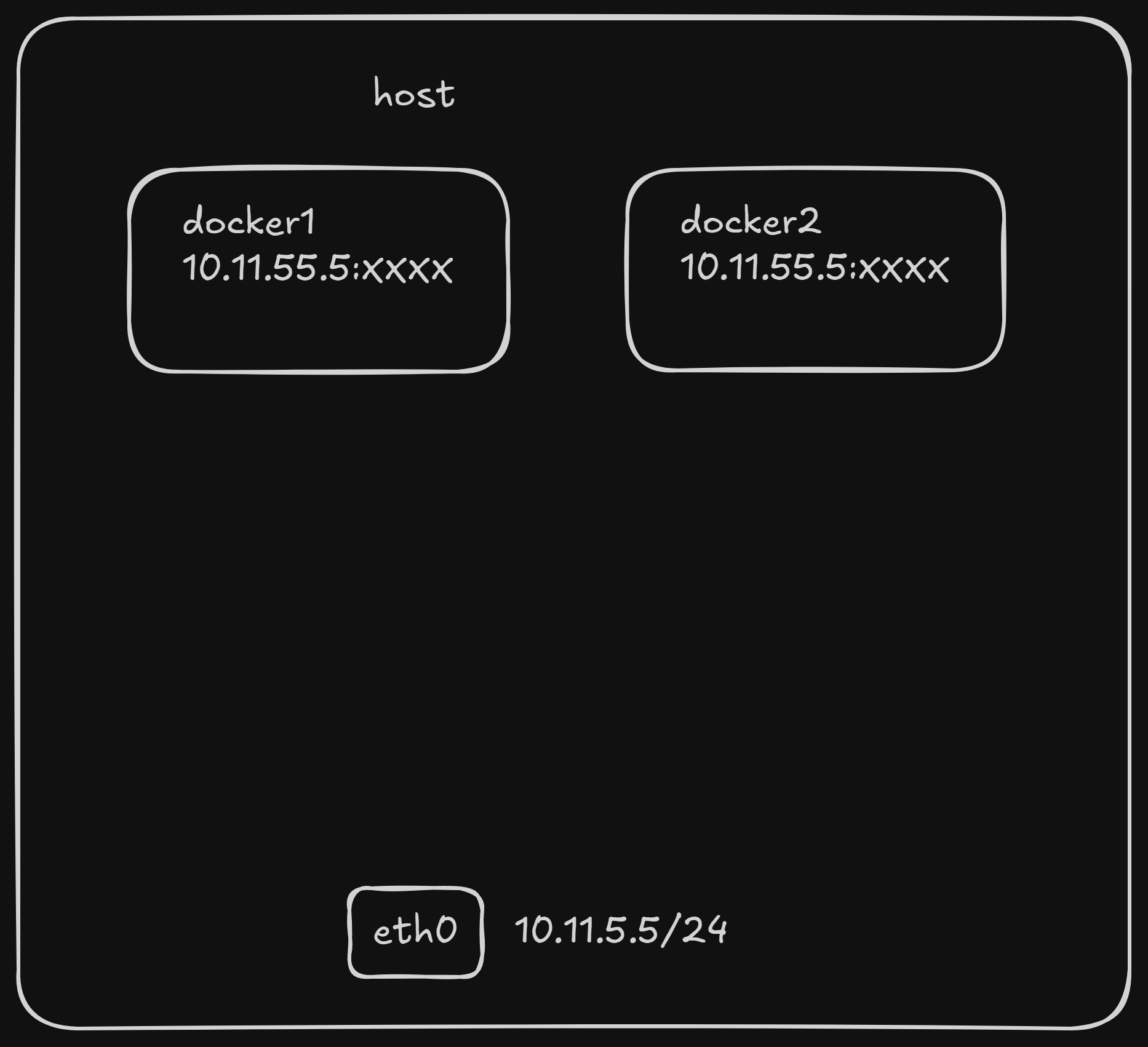

2. Host Mode

When a container is started with the host network mode, it does not get its own isolated Network Namespace. Instead, it shares the host machine’s Network Namespace. This means:

- The container will not have its own virtual network interfaces or unique IP address.

- It will directly use the host’s IP address and port space. If a service inside the container listens on port 80, it will be accessible on the host’s IP address at port 80, provided no other process on the host is already using that port.

- However, other aspects of the container, such as its filesystem and process list, remain isolated from the host.

Use Cases:

Host mode is useful for performance-critical applications or when you need the container to directly access network services on the host without any network address translation (NAT) overhead, such as when running a network monitoring tool or a high-performance proxy.

Demonstration:

- Run Containers in Host Mode:

      docker run -d --network host --name docker_host1 alpine sleep 3600
      docker run -d --network host --name docker_host2 alpine sleep 3600

  Note: Running multiple containers in host mode that try to bind to the same port will result in port conflicts.

- Inspect Container Network Configuration (inside the container):

      docker exec -it docker_host1 sh

  Inside the `docker_host1` container, execute:

      / # ip a
      / # ip r

  You will observe that the IP addresses and network interfaces listed are the same as those on the host machine, demonstrating that the container is sharing the host’s network stack.

  Type `exit` to leave the container’s shell.
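
Another way to confirm the shared network stack is to compare network namespace identifiers directly. This sketch assumes a Linux host running the Docker daemon natively (not Docker Desktop or rootless mode), where `/proc/self/ns/net` identifies the caller’s network namespace. The helper name `same_netns_as_host` is hypothetical.

```shell
# Succeed (exit status 0) when a container started with --network host
# reports the same network namespace as the calling shell.
same_netns_as_host() (
    host_ns=$(readlink /proc/self/ns/net)
    ctr_ns=$(docker run --rm --network host alpine readlink /proc/self/ns/net)
    echo "host:      $host_ns"
    echo "container: $ctr_ns"
    [ "$host_ns" = "$ctr_ns" ]
)
```

Under those assumptions both lines should show the same identifier. Repeating the container command without `--network host` should print a different one, because a bridge-mode container gets its own namespace.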

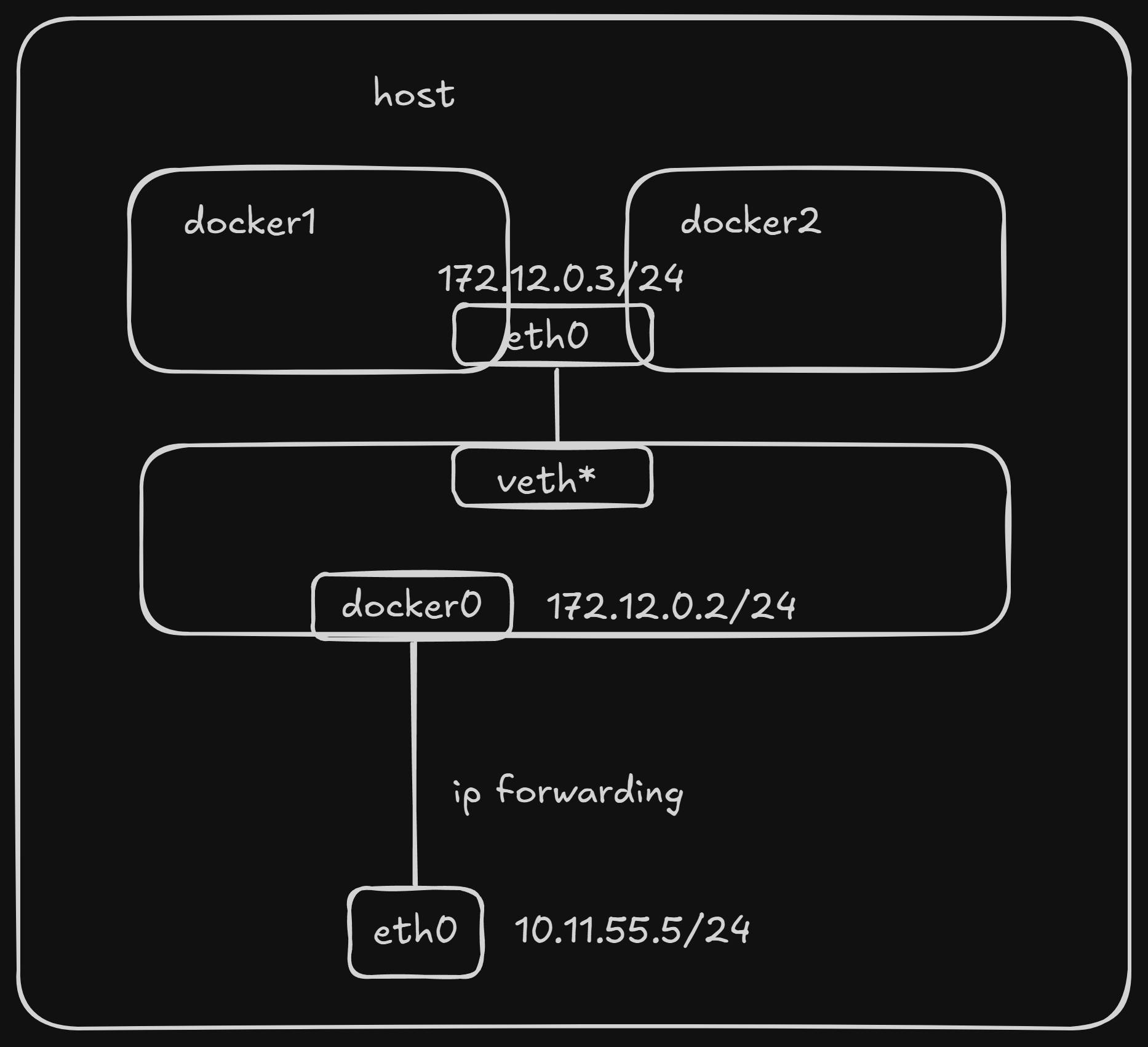

3. Container Mode (or container:NAME_OR_ID)

This mode specifies that a newly created container should share the Network Namespace of an already existing container, rather than sharing with the host.

- The new container will not create its own network interfaces or configure its own IP address.

- It will share the IP address, port range, and network configuration (e.g., DNS servers, routing table) of the specified existing container.

- The two containers, while sharing network resources, still maintain isolation in other aspects, such as their filesystems and process lists.

- Processes in both containers can communicate with each other via the loopback interface (`lo`).

Use Cases:

This mode is commonly used in “sidecar” patterns, where a helper container shares the network stack with a primary application container. For example, an Nginx reverse proxy might share the network namespace with a web application container to simplify configuration and inter-process communication.

Demonstration:

- Start a “Base” Container (e.g., in bridge mode):

  First, we need a container whose network stack will be shared. If `docker_bri1` is still running from the Bridge Mode demonstration, reuse it and skip this command; otherwise Docker reports a name conflict.

      docker run -d --name docker_bri1 alpine sleep 3600

- Start a New Container in `container` Mode, Sharing `docker_bri1`’s Network:

      docker run -d --network container:docker_bri1 --name docker_con1 alpine sleep 3600

- Inspect Network Configuration of Both Containers:

      docker exec -it docker_con1 sh

  Inside `docker_con1`, execute `ip a` and `ip r`. Note the IP address.

      / # ip a
      / # ip r

  Type `exit`. Now, do the same for `docker_bri1`:

      docker exec -it docker_bri1 sh

  Inside `docker_bri1`, execute `ip a` and `ip r`.

      / # ip a
      / # ip r

  You will observe that `docker_con1` and `docker_bri1` have the exact same IP addresses and network interfaces, confirming they share the same network stack.

  Type `exit` to leave the container’s shell.
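
The loopback communication between containers that share a network namespace can be sketched as follows. The container name `app_demo`, the `nginx:alpine` image, and the one-second startup wait are illustrative assumptions, not part of the original demonstration.

```shell
# Start a primary container, then reach it from a helper container over loopback.
sidecar_demo() {
    # Primary container: nginx listens on port 80; no port is published.
    docker run -d --name app_demo nginx:alpine
    # Give nginx a moment to start listening.
    sleep 1
    # Helper container: joins app_demo's network namespace and
    # fetches the nginx welcome page via 127.0.0.1.
    docker run --rm --network container:app_demo alpine \
        wget -q -O - http://127.0.0.1:80/
}
```

If the request succeeds, the helper prints the HTML of the nginx welcome page even though no port was published, because both containers see the same `lo` interface. `docker rm -f app_demo` removes the primary container afterwards.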

4. None Mode

In none network mode, a Docker container has its own isolated Network Namespace, but Docker does not configure any network interfaces within it. This means:

- The container will have no network interface other than loopback (`lo`), no externally reachable IP address, and no routing information.

- It is completely isolated from the network, both external and internal.

- You would need to manually add network interfaces and configure IP addresses and routes within the container if you wanted it to have network connectivity.

Use Cases:

This mode is typically used for specialized containers that do not require any network access, or when you need full control over the container’s networking configuration and plan to set it up manually using other tools. It’s often used for security-critical environments where network isolation is paramount.

Visual Representation:

(Container is isolated and has no network connectivity by default.)

Demonstration:

- Run a Container in None Mode:

      docker run -d --network none --name docker_non1 alpine sleep 3600

- Inspect Network Configuration (inside the container):

      docker exec -it docker_non1 sh

  Inside the `docker_non1` container, execute:

      / # ip a
      / # ip r

  You will see only the loopback interface (`lo`) and no other network interfaces or routing entries, confirming the lack of network configuration.

  Type `exit` to leave the container’s shell.
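
Manual configuration of a none-mode container, as mentioned above, might look like the sketch below. It assumes a Linux host with root access and the `iproute2` and `nsenter` tools. The interface names `veth_host` / `veth_ctr`, the example addresses, and the helper name `wire_none_container` are hypothetical choices for this illustration.

```shell
# wire_none_container CONTAINER HOST_CIDR CONTAINER_CIDR
# Create a veth pair, move one end into the container's network namespace,
# and assign an address to each end.
wire_none_container() (
    # PID of the container's main process; it identifies the namespace to enter.
    pid=$(docker inspect --format '{{.State.Pid}}' "$1")
    sudo ip link add veth_host type veth peer name veth_ctr
    sudo ip link set veth_ctr netns "$pid"
    sudo ip addr add "$2" dev veth_host
    sudo ip link set veth_host up
    sudo nsenter -t "$pid" -n ip addr add "$3" dev veth_ctr
    sudo nsenter -t "$pid" -n ip link set veth_ctr up
)
```

For example, after `wire_none_container docker_non1 10.10.0.1/24 10.10.0.2/24`, the host should be able to reach the container with `ping -c 2 10.10.0.2`. The container still has no default route, so it remains cut off from other networks.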

Further Topics: Cross-Host Communication

This guide focuses on single-host Docker networking. For Docker containers to communicate across different host machines, you would typically use advanced networking solutions like:

- Overlay Networks (Docker Swarm Mode): Built-in Docker Swarm capability that enables communication between containers running on different nodes in a Swarm cluster.

- Third-Party Container Network Interface (CNI) Plugins: Solutions like Calico, Flannel, or Weave Net provide more sophisticated networking capabilities, often used in larger container orchestration platforms like Kubernetes.
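
As a first step toward the overlay option listed above, an overlay network can be created on a single node. This is a sketch only: the network name `my-overlay` and the helper name `create_overlay` are hypothetical, and `docker swarm init` may additionally need `--advertise-addr` on hosts with several network addresses.

```shell
# Turn the current Docker host into a single-node Swarm manager, then
# create an overlay network that standalone containers may also attach to.
# $1 is the network name; it defaults to my-overlay.
create_overlay() {
    docker swarm init
    docker network create --driver overlay --attachable "${1:-my-overlay}"
}
```

Other hosts would then join the Swarm with the `docker swarm join` command printed by `docker swarm init`. Containers started on any node with `--network my-overlay` can then communicate across hosts.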

These topics, especially cross-host communication, are more advanced and are often covered in the context of container orchestration platforms like Kubernetes.